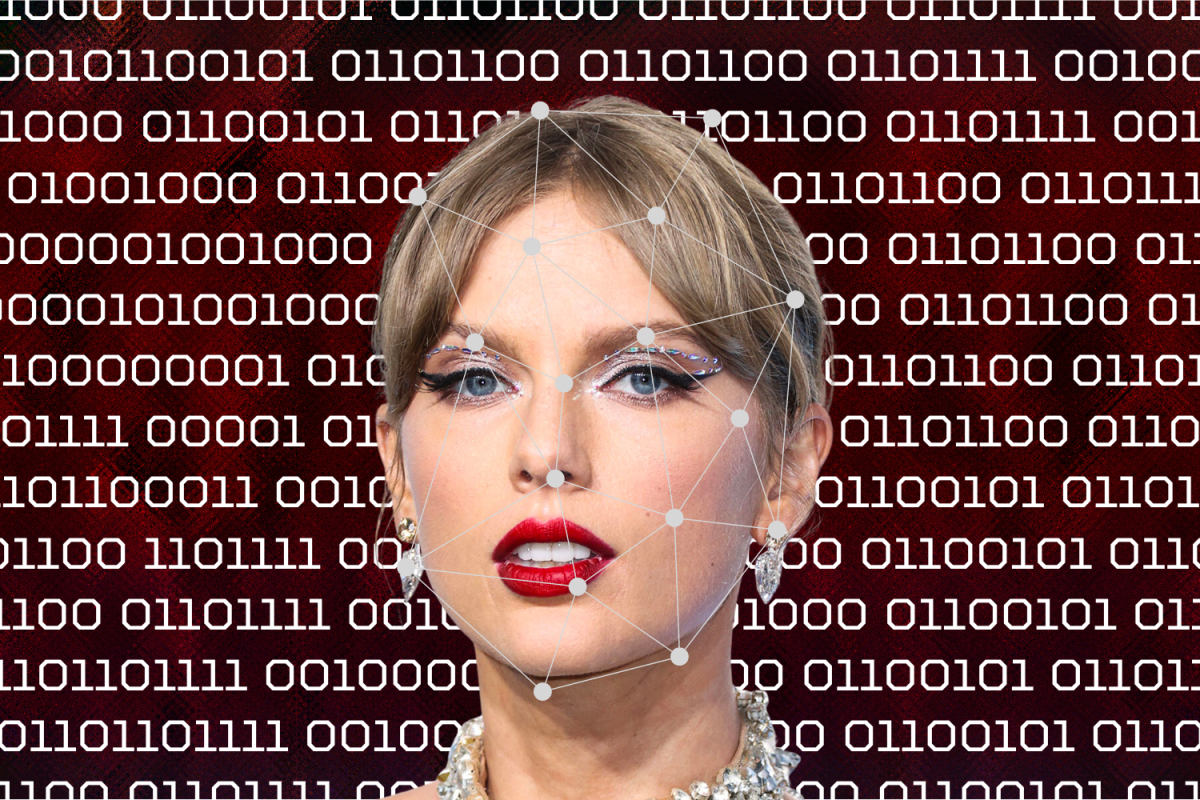

X, formerly known as Twitter, bans searches for Taylor Swift after explicit deepfakes go viral, raising awareness of advancing deepfake technology.

On Jan. 28, explicit AI-generated deepfake images of Taylor Swift went viral on X in a series of posts. In an attempt to block the images from spreading, X banned searches for Taylor Swift for one day.

A deepfake is a piece of synthetic media, generated by a machine learning model, that uses a person’s likeness to portray events that never occurred. According to Encyclopædia Britannica, the term, a portmanteau of “deep learning” and “fake,” originated in 2017 on a Reddit page for face-swapping celebrities.

Mario Peedor, founder of Lip Synthesis, an AI-generated synthetic media educational company, says that five years ago, deepfake technology was nowhere near as developed as it is today.

“The first step is to capture the face of the person. The second step is to manipulate the lips. We have built a model out of hundreds of thousands of people,” Peedor said.

Lip Synthesis, like many other deepfake companies, uses a model that is trained on many faces.

“The model is called a neural network, which checks 100,000 different people how they speak. It takes different races and different languages. This general model uses lip-synching technology to try to match the lips. The results are nowhere near perfect yet, but it’s getting very, very close,” Peedor said.

Afterwards, the network tries to capture the personal likeness of the person being deepfaked, according to Peedor. If the person has moles or birthmarks, the network tries to add them in.

According to Peedor, a mask of the prepared face is applied to the video in the end.

“It’s not really changing your face. Your face will become a mask, and your old face is still in the background, but we will apply the mask on top of your original face,” Peedor said.
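The masking step Peedor describes resembles a standard image-compositing technique called alpha blending. The sketch below is a minimal illustration of that general idea, not Lip Synthesis’s actual method: the function name `composite`, the tiny grayscale “images” and the `alpha` values are all hypothetical, chosen only to show how a generated face layer can sit on top of an original frame that remains underneath.

```python
# Illustrative sketch only (hypothetical names and data, not any company's code).
# Images are tiny grayscale grids: lists of rows, each pixel a float from 0 to 1.

def composite(original, face_layer, alpha):
    """Overlay face_layer on original.

    alpha is 1.0 where the mask fully covers the original pixel,
    0.0 where the original shows through, and in between at soft edges.
    """
    height, width = len(original), len(original[0])
    result = []
    for y in range(height):
        row = []
        for x in range(width):
            a = alpha[y][x]
            # Weighted mix: the mask contributes a, the original contributes 1 - a.
            row.append(a * face_layer[y][x] + (1.0 - a) * original[y][x])
        result.append(row)
    return result


original = [[0.2, 0.2], [0.2, 0.2]]    # the untouched video frame
face_layer = [[0.8, 0.8], [0.8, 0.8]]  # the generated "mask" face
alpha = [[1.0, 0.5], [0.0, 0.0]]       # where, and how strongly, the mask applies

blended = composite(original, face_layer, alpha)
```

Where `alpha` is 0, the output pixel is simply the original frame, which matches Peedor’s point that the old face is still in the background.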

Through this process, Lip Synthesis can create many videos a day. However, the company vets requests to ensure that each product is for educational purposes and is non-defamatory.

“Our software generally creates 100 videos per day, but we get requests for at least 300 per day. And we can’t allow them because they are connected to marketing fraud,” Peedor said.

Besides raising ethical concerns, this misleading content about real people also has a significant impact on society, according to Timothy Redmond, an instructor in media studies at UC San Francisco and a political and investigative reporter.

“For example, with celebrities like Taylor Swift, people are making fake nude images of her and putting them online, and that’s really dangerous because it hurts people’s reputation,” Redmond said.

According to Redmond, the deepfakes of Swift raise awareness of the need for media literacy, especially regarding deepfake content.

“I do think that the celebrity (status) of Taylor Swift and the fact that they had to halt searches, and the news of that spreading, is going to help people understand how dangerous this technology can be,” Redmond said.

Explicit deepfake images and videos also have an extreme impact on people who are not celebrities, according to Nolan Higdon, a lecturer in history, media studies, and education at UC Santa Cruz.

“We fixate on the wealthiest, most famous people, and we act like working people and average people don’t matter. I’d like a few more conversations about how deepfakes are affecting those people’s lives,” Higdon said.

According to the South Asia Times, in Pakistan, digitally edited graphic photos of a girl led to subsequent shaming.

“The problem is that the technology has gotten so good so fast that we aren’t very good as a society at figuring out how to respond. But technology has advanced faster than our ability as a society to address it,” Redmond said.

Jeff Share, a UC Los Angeles lecturer, says that people must develop a degree of media literacy to combat the spread of deepfakes.

“Disinformation or so-called fake news has basically been around for as long as humans have been around. And with each new technology, it forged new opportunities to create more complex or sophisticated disinformation,” Higdon said.

Redmond says that before laws are passed to protect people from dangerous deepfakes, people have a responsibility to check their news sources.

“You have a responsibility to go out of your way to check the authenticity of what you see and to not believe everything that you see on social media,” Redmond said.

According to Higdon, in 2018, when a deepfake video of former President Barack Obama was published on YouTube, it was discernibly fake. However, in today’s landscape, it may be hard to tell the difference between real and fake.

“Nowadays, with information being so easy to spread and so easy to manipulate, we need to be far more conscious about everything we’re hearing and learning,” Share said.

Aside from vetting your content intake, Share says it’s also important not to spread disinformation.

“A lot of times, someone creates something on purpose that’s false. Then, other people don’t know whether it’s false, so they reshare it. Then, what they’re doing is creating misinformation. So you have disinformation and misinformation, creating an atmosphere where it’s very difficult to know what’s real,” Share said.

Higdon suggests that people should identify general news outlets and journalists who have a long history of getting things right and try to follow them. He also believes that the people, not the government, should be the ones to determine what is right.

“We shouldn’t empower those entities to determine what the truth is. We need to spend more time looking at what we can do as citizens and try to give less power to the government or industry to determine what’s true and what’s false for us,” Higdon said.

On the other hand, Share believes that the government should regulate the spread of misinformation.

“It shouldn’t be okay to say outright lies and racist commentaries out on the internet without any type of regulation or oversight because people get hurt,” Share said.

Ultimately, like any technological advancement, deepfakes have both positives and negatives.

“Disinformation and bullying and things like that are bad, but who knows what good usage is on the horizon?” Higdon said.

Peedor says that in the future, deepfake models could be used to translate companies’ advertisements into other languages, helping them expand into new markets.

“Any technological innovation in itself isn’t necessarily good or bad. It’s how we use it. It’s how we shape it. It’s how we imagine it,” Higdon said.