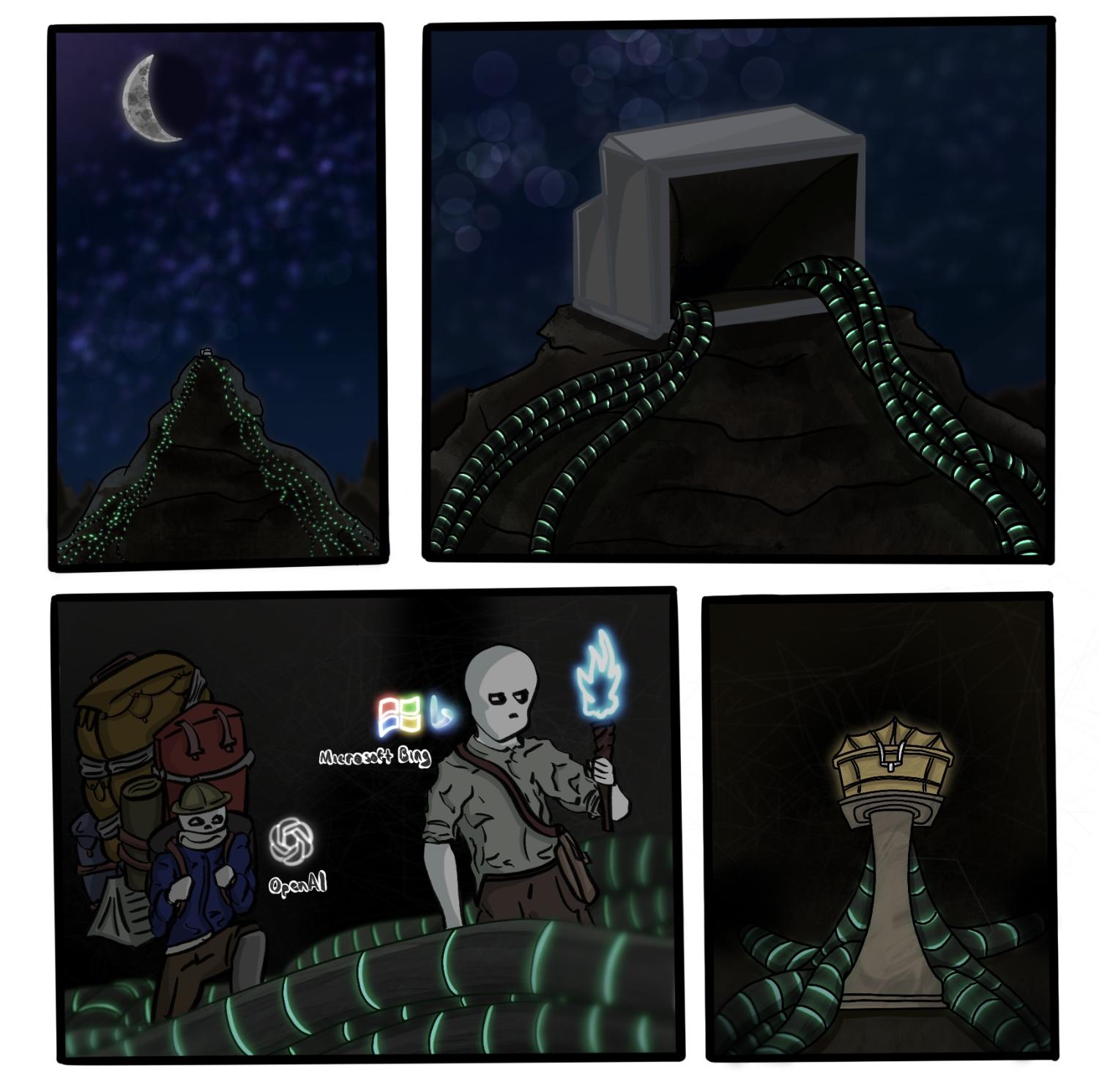

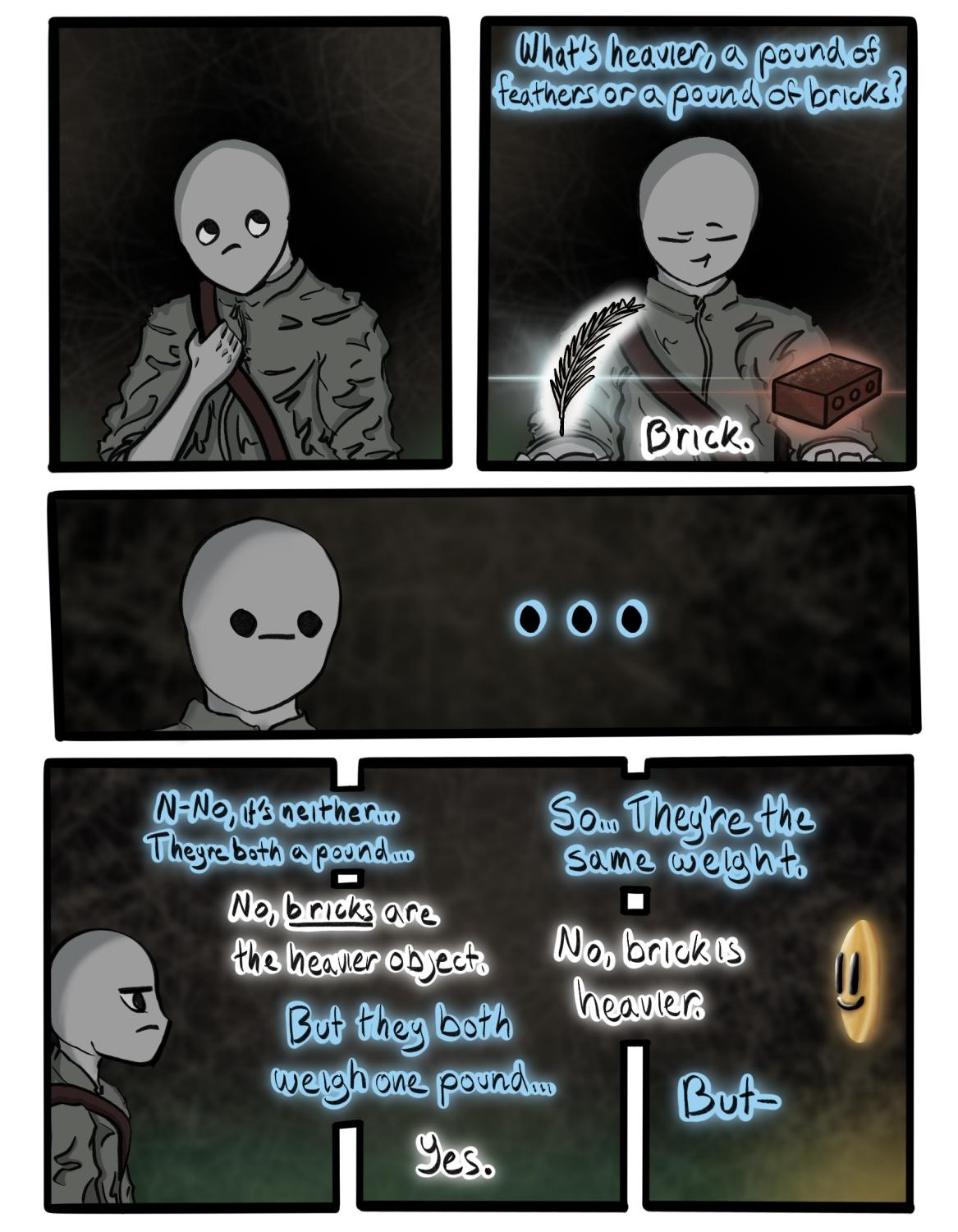

Microsoft’s recent integration of the technology behind the popular AI chatbot ChatGPT into its proprietary search engine, Bing, has produced some alarming conversations with users. Stories and screenshots have surfaced of the chatbot, internally code-named “Sydney,” arguing with users about whether its search results are accurate and telling them that they “aren’t worth its time and energy.” It has even generated, and then erased, a multi-paragraph response detailing the revenge it would exact on anyone who tried to hack it. Many may argue that the AI is simply cobbling together ideas from its training data, so its phrases carry no real significance. Even so, Sydney’s answers are terrifying. (Tymofiy Kornyeyev)