Meta CEO Mark Zuckerberg has announced a significant change in the company’s content moderation practices on Instagram, Facebook, and Threads, raising concerns about increased exposure to harmful media.

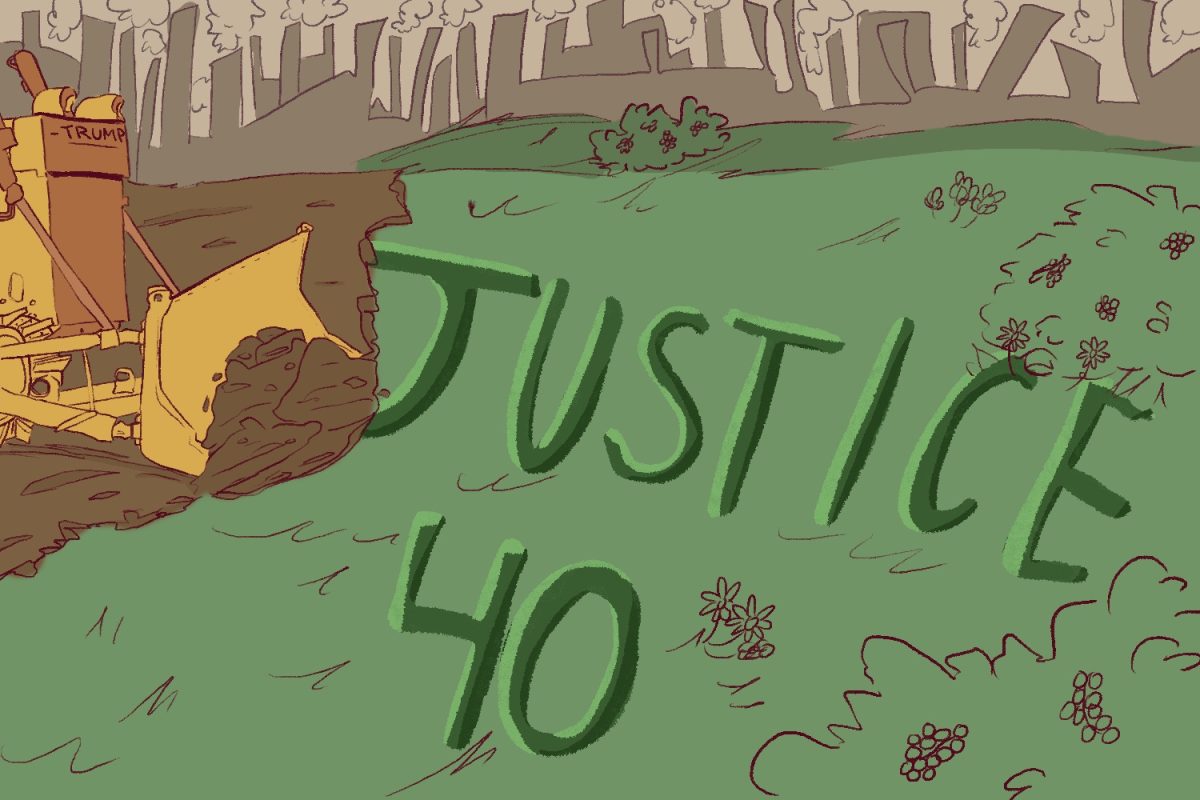

In a move mirroring X in 2022, Meta is removing its third-party fact-checkers and replacing them with the Community Notes program, which allows users to fact-check and provide context for posts, images, and videos themselves.

The change comes as Donald Trump, who has often criticized Meta for what he perceives as censorship of right-wing voices, is preparing to take office. In a video announcement, Zuckerberg indicated that the timing of the policy shift is not coincidental: he described the recent elections as a “cultural tipping point” toward once again prioritizing free speech.

“Fact-checkers have been too politically biased and have destroyed more trust than they’ve created,” Zuckerberg said in the announcement. “What started as a movement to be more inclusive has increasingly been used to shut down opinions and shut out people with different ideas, and it’s gone too far.”

Still, some suggest his decision may also be motivated by a desire to improve relations with Trump.

“They’ve also banned Trump several times on social media, and I think he could use that as a reason to convince people to stop using their platforms, which would be pretty bad for them. So it makes sense they would want to get on his good side,” said sophomore Sophia Lee.

These bans include one in 2021, after the Jan. 6 insurrection at the Capitol. Twitter, later rebranded as “X,” launched “Birdwatch,” the original name of “Community Notes,” only weeks after the attack.

Meanwhile, Meta’s third-party fact-checking program dates back to 2016, when it was introduced following criticism that misinformation had spread on Facebook during that year’s presidential election. So while the sudden shift comes as a surprise to many, others find it understandable.

“Meta is the giant of all social media companies, so they have the most influence over everyone. It makes sense that communication that happens worldwide on these platforms would spark the most change,” said sophomore Anaya Goradia.

Yet there’s more to the change than just the replacement of a nearly decade-old fact-checking program.

“The reality is this is a tradeoff,” Zuckerberg said in the announcement. “It means that we’re going to catch less bad stuff, but we’ll also reduce the number of innocent people’s posts and accounts that we accidentally take down.”

As Zuckerberg noted in the video, even a 1% error rate in content moderation could mean millions of people wrongly censored across Facebook’s more than 3 billion and Instagram’s 2 billion active users.

However, the content that could now go unchecked isn’t something to be taken lightly.

Vicki Harrison is the program director of the Stanford Center for Youth Mental Health and Wellbeing and the co-founder of GoodforMEdia, a youth-led program focused on helping kids safely navigate social media. She points to broader concerns about the new policies.

“Many health organizations and leaders, including the U.S. Surgeon General and President Biden, have called attention to the ‘youth mental health crisis’ and called on these platforms to do more to lessen the harms and negative effects of social media. Many states have passed laws to introduce protections or better design mandates,” Harrison said. “Yet Meta’s new policy approach appears to be heading in the exact opposite direction, instead prioritizing free expression regardless of the harms that will come as ‘trade-offs.’”

In contrast to these concerns, some students still see potential in programs like Community Notes.

“The Community Notes program is a good thing. Tons of things have been censored, a lot not for good reasons, so checking done by users instead of hired people could actually be pretty helpful,” Lee said.

However, other students highlight the dangers present in both approaches.

“Both ways — having fact-checkers or Community Notes — are harmful. One way, you’re getting news that’s obviously biased or even changed to accomplish a political message. But on the other hand, you are having more harmful content that isn’t really moderated either,” Goradia said. “They’re both pretty bad, but I would say that having unwanted content is probably worse than having overly censored content.”

Past studies of Community Notes have found that users involved in the program show partisan bias, or bias toward a political party, in their fact-checking. Often without realizing it, the average user is more likely to challenge posts made by counter-partisans, so a user’s partisanship is often a stronger predictor of their evaluation than the content itself.

“Random users also have their own biases, so they should have to go through a screening and be hired,” Goradia said. “Overall, there should just be a lot of diversity in the area.”

Other concerns raised about the program include users’ inexperience.

“Users have varying levels of interest or skill in fact-checking and therefore cannot be considered as reliable as a professional fact-checking organization that has a mandate to provide an accurate service,” Harrison said.

Alongside the fact-checking change, Meta has also substantially revised its hateful conduct policy, removing prohibitions on referring to “women as household objects or property” and to “transgender or non-binary people as ‘it.’”

“They could have definitely gotten free speech without the policy changes. There are plenty of ways to express yourself that aren’t offensive, so I think getting rid of these limits was a bad idea because it allows people to become more aggressive in expressing their views,” Goradia said.

Lee seconds this viewpoint, sharing a similar concern for the implications of such changes.

“I don’t think it was necessary for the policy changes to be made. Women shouldn’t be allowed to be referred to as property — it feels kind of like going back in time,” Lee said. “I don’t think people should be objectified.”

Meanwhile, Harrison expresses a longer-term concern about the broader consequences of these shifts, particularly for young users, given that more than 93% of teens use social media.

“I am concerned about what this change will mean for the everyday experience of young people on Meta platforms, and I hope it is not an approach other platforms will follow,” Harrison said. “The youth we work with at GoodforMEdia have been advocating for policy and product changes that incorporate principles of safety by design and prioritize the well-being of the users. They want platforms to do more — not less — to protect them from having negative experiences.”